Presentation

P24 - How to Build an Energy Dataset for HPC

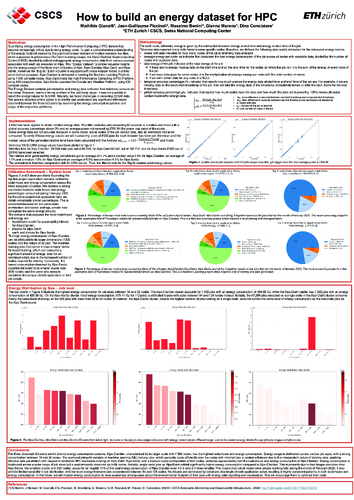

Presenter

DescriptionQuantifying the energy consumption in HPC domain is becoming increasingly critical nowadays, driven by rising energy costs. To gain a comprehensive understanding of the energy footprint created by the significant power demand of modern systems like Alps, which exceeds its predecessor Piz Daint in energy usage, the Swiss National Supercomputing Centre (CSCS) decided to collect and aggregate energy consumption data from various sources associated with each job executed on Alps to create an energy dataset. This dataset is contained in a MySQL database and consists of: - Job Metadata: This includes fields such as job ID, CPU hours consumed, nodes utilized, start time, end time, and elapsed time. This data is sourced from the SLURM workload manager via the jobcompletion plugin component. Energy Metrics: energy consumption data is derived from telemetry sensors installed on Alps supercomputer. These sensors, provided by DMTF’s Redfish® standard , capture raw data then processed to calculate energy consumption for all nodes associated with a job and aggregate the results. SLURM Energy data: added through energy plugin to the Job Metadata. Quality Indicators: Additional fields are included to assess and ensure reliability and accuracy of computed energy consumption metrics. DCGM data: Nvidia GPU collection metrics system.

TimeMonday, June 1610:20 - 10:50 CEST

LocationCampussaal - Plenary Room

Session Chair

Event Type

PASC Poster